Introduction

This is the UltraSense Systems excerpt of my CES automotive trip report. I am researching the latest automotive tech for a university project. The UltraSense Systems meeting room was located in the West Hall of the Las Vegas Convention Center, in the Tech East Zone. The West Hall, along with part of the North Hall, was where most of the automotive technology was located. The TouchPoint HMI controller messaging is shown below.

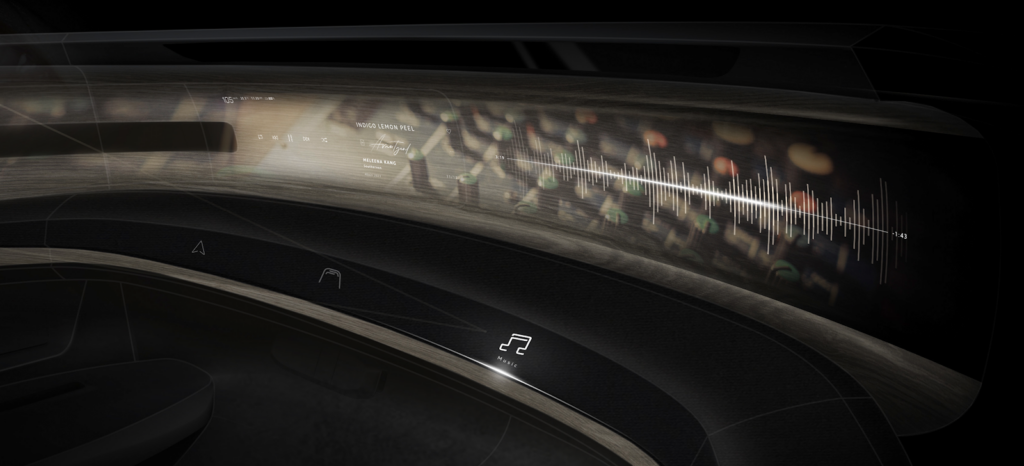

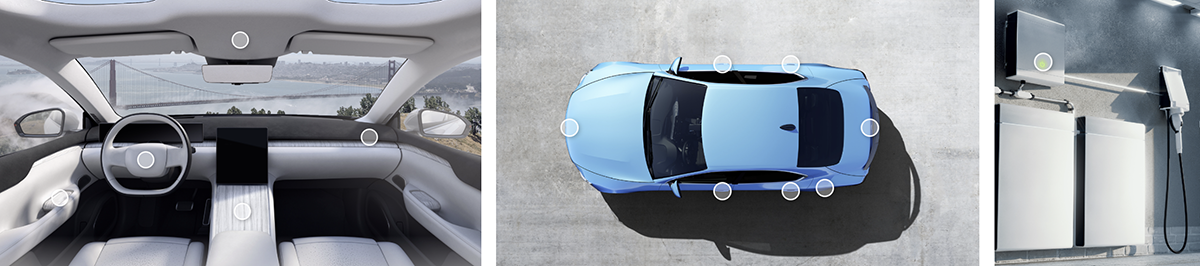

UltraSense is a pioneer of InPlane technology, which enables SmartSurface HMI (Human Machine Interface) experiences focused on automotive applications. UltraSense enables highly reliable “touchpoints” of interaction in places that make sense: interfaces are presented when you need them, hidden when not applicable, and lit or presented as needed for the current task in the car. This experience design enables simpler interactions and gives the driver or end user the ability to reach the needed controls quickly and easily. These touchpoints are located beyond the touchscreen, physically where you may need them in the automobile: on the steering wheel, center console, armrest, door, and overhead, as well as on the exterior of the vehicle for door access, trunk, frunk, and e-Lid. They displace the traditional big and bulky button switch.

The under-metal UltraForce Touchpoint solution was in the Asahi Kasei AKXY2 concept car, which I saw in the North Hall. See more here: https://ultrasensesys.com/go/akxy2/

In-Plane Thin

Solid-state electronics enable the thinnest possible touchpoints, reducing traditional and legacy switch interface modules by as much as 70% in some instances and, as a result, enabling touchpoint interactions in places that were physically impossible before. In the armrest or the center console, for example, the retractable cup holder cover used to be just a lid, but now that real estate can be used to add touchpoints.

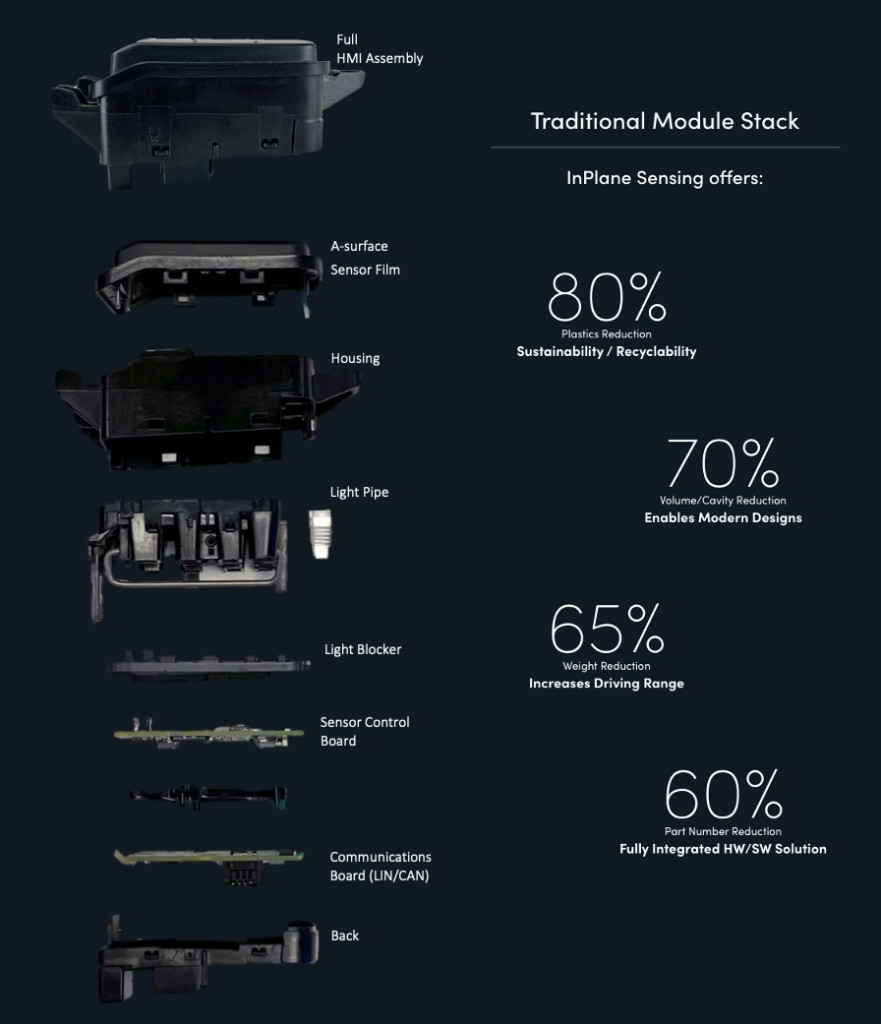

A traditional module stack can be made up of several parts: the sensor board electronics; the capacitive ITO film; the feedback components, including the LED, lighting controller, and light pipe; and the communications board that supports the LIN and CAN automotive network protocols.

With InPlane, the “A-surface”, the top material that you touch, could be the plastic, glass, metal, wood, or leather of the center console, steering wheel, or door panel/armrest. The UltraSense module is “laminated” to the backside of the A-surface. The TouchPoint HMI controller/module can support:

- Sensor Fusion: Multi-Mode touchpoint sensing

- MCU processing: The touchpoint sits at the edge of the automotive network as a low-power, targeted HMI controller that runs the AI / Machine Learning algorithms and stores experience profiles and settings to deliver no-latency control

- Feedback Control: driving Illumination and effects, Audio, and Haptics

- Secure Connectivity: options support direct integration of LIN and CAN protocols

ReInventing the “TouchPoint”

The UltraSense touchpoints are solid-state semiconductor electronics, that is, electronic equipment built from semiconductor devices such as transistors, diodes, and integrated circuits (ICs). They have no moving parts and replace the classic mechanical switch in the vehicle. Solid-state benefits include higher reliability, with no moving parts to break, and seamless integration into large surfaces, which is better for hygienic, anti-microbial environments. The biggest impact for the automaker, though, is freedom in design: the ability to offer visually appealing automotive interiors and exteriors with a modernist design and clean lines, and interfaces that present themselves when needed, where they are most ergonomically desirable and accessible.

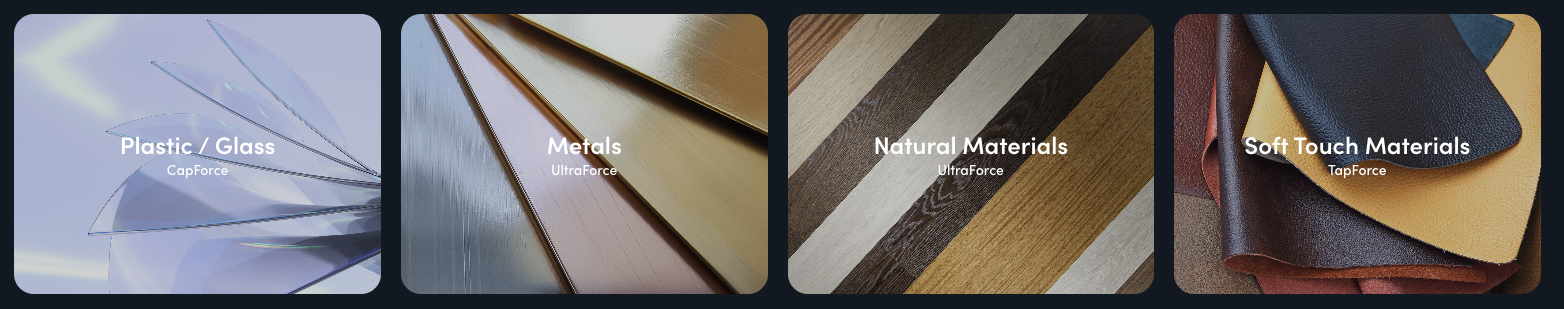

TouchPoint operates across the broadest range of materials

UltraSense technology enables touchpoints in the broadest range of materials. It operates through traditional plastic and glass, but what was amazing were the demonstrations operating through various types of metal, wood, and other premium materials such as leather and soft fabrics. This gives automotive designers the creative freedom to use premium and sustainable materials. Previously they were technologically restricted to plastics, because capacitive sensing is the most common touch technology and is most often implemented in plastic.

High Accuracy TouchPoints

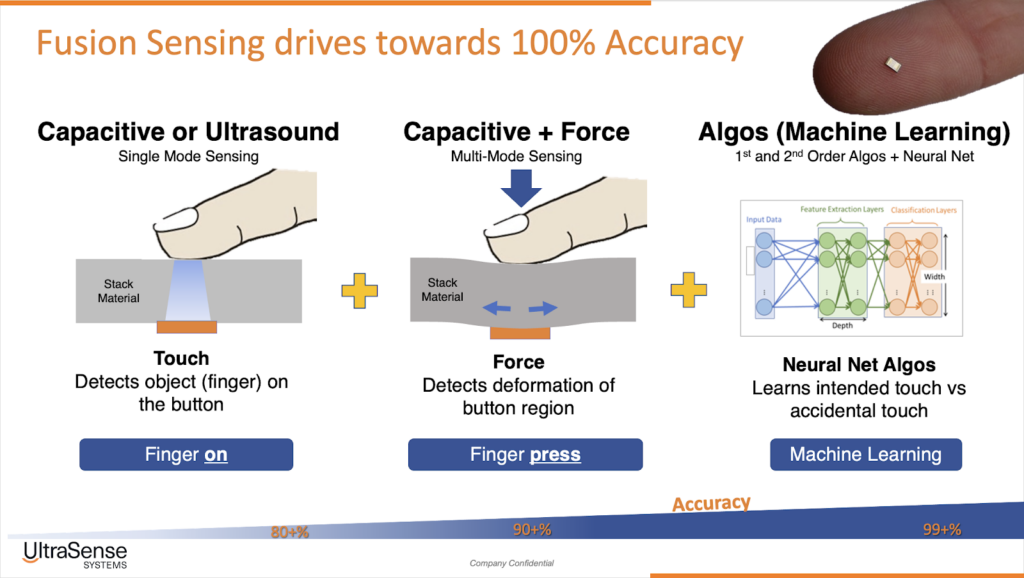

A key element of delivering great HMI experiences is precise touch accuracy. Basically, the touchpoint responds when you intend to touch it and doesn’t false trigger on “un-intended” touches. UltraSense’s technology has several advantages here.

With the right primary touch technology, such as capacitive (plastics) or ultrasound (metals, wood), you can detect a finger touch.

Multi-Mode Sensing

UltraSense multi-mode sensing then adds force sensing to the primary touch technology: CapForce in plastic, and UltraForce in metal and wood. This “dual-mode” sensing uses two senses to increase accuracy. It is much like reaching out for a can of soda: you can see the can and touch the can to know it’s really there. Dual-mode sensing reduces the illusion that something is there when it’s not.
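As a rough illustration of the dual-mode idea, the sketch below registers a press only when the primary touch sensor and the force sensor agree. The `Sample` type, the `is_press` function, and the threshold value are hypothetical names and numbers chosen for this example; they are not part of any UltraSense API.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One reading from a touchpoint (hypothetical structure)."""
    touch_detected: bool  # primary sensor (capacitive or ultrasound) sees a finger
    force: float          # force reading in arbitrary units


# Assumed threshold; a real system would tune this per material and design.
FORCE_THRESHOLD = 0.5


def is_press(sample: Sample, force_threshold: float = FORCE_THRESHOLD) -> bool:
    """Report a press only when both sensing modes agree."""
    return sample.touch_detected and sample.force >= force_threshold


print(is_press(Sample(touch_detected=True, force=0.8)))  # firm touch
print(is_press(Sample(touch_detected=True, force=0.1)))  # light brush
```

Requiring both signals is what lets a light brush (touch without force) or a knock on the surface (force without touch) be ignored.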

AI Machine Learning Algorithms

Every UltraSense Touchpoint contains a tiny edge-processor MCU with enough power to manage the touch interactions at the edge. That processor houses Artificial Intelligence / Machine Learning algorithms. These are supervised learning algorithms that can incorporate intelligence from the touchpoint and from networked touchpoints, and they are used to train the touchpoint on what represents intended and un-intended activations (false triggers, accidental activations). Good ML operates on data, and the more data the better: sensor fusion / multi-mode sensing offers greater insight into the touch interaction at every millisecond.

For example, every Touchpoint node has a QuadForce touch sensor, which provides four times the data of a traditional force sensor. This can be used to better target a touch when pressing slightly off target. (See UltraSense’s force touch blogs for more details.)

With more data from the touch sensing alone, the ML algorithms can better detect the actual interaction, and with supervised learning the interactions can be classified as intended or un-intended touches. It gets even better when looking at a system of touchpoints, because adjacent touchpoints can be networked.
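One way four force readings could help locate an off-target press is a force-weighted centroid. The sketch below assumes the four readings come from the corners of a square; that layout, the `POSITIONS` table, and the `press_location` function are assumptions made for illustration, not a description of how QuadForce is built.

```python
# Hypothetical sensor positions: corners of a square centered on the touchpoint.
POSITIONS = [(-1.0, -1.0), (1.0, -1.0), (-1.0, 1.0), (1.0, 1.0)]


def press_location(forces):
    """Estimate the press position as the force-weighted centroid.

    `forces` holds four non-negative readings, ordered like POSITIONS.
    Returns (x, y), or None when no force is applied.
    """
    total = sum(forces)
    if total <= 0:
        return None
    x = sum(f * px for f, (px, _) in zip(forces, POSITIONS)) / total
    y = sum(f * py for f, (_, py) in zip(forces, POSITIONS)) / total
    return (x, y)


print(press_location([1.0, 1.0, 1.0, 1.0]))  # centered press
print(press_location([0.0, 2.0, 0.0, 2.0]))  # press toward the right edge
```

A single force value can only say how hard the surface was pressed; four values also give a direction, which is the extra information an algorithm can use to decide whether an off-center press was still meant for this touchpoint.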
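To make the supervised-learning step concrete, here is a deliberately tiny stand-in: a nearest-centroid classifier trained on labeled touches. The features (peak force and press duration), the training values, and the function names are all invented for illustration; UltraSense's actual algorithms and features are not described in this report.

```python
from math import dist


def train(samples):
    """Compute one centroid per label from (features, label) pairs."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def classify(model, features):
    """Return the label whose centroid is closest to the new touch."""
    return min(model, key=lambda label: dist(model[label], features))


# Made-up training data: (peak force, press duration in seconds), label.
training = [
    ((0.90, 0.20), "intended"),
    ((0.80, 0.25), "intended"),
    ((0.20, 0.05), "unintended"),  # light brush
    ((0.30, 0.10), "unintended"),  # glancing contact
]

model = train(training)
print(classify(model, (0.85, 0.22)))
print(classify(model, (0.15, 0.04)))
```

The point of the sketch is the workflow rather than the model: label example touches, fit a model, then classify new touches. Richer features from multi-mode sensing give such a model more to separate the two classes with.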

DATA – DATA – DATA

If “Data is the new Oil”, then in SmartSurface HMI, UltraSense offers a rich data mine for driving amazing user experiences. The key takeaway is that the data an UltraSense TouchPoint collects is better information for making intelligent decisions. With supervised ML, the system algorithms can be trained to reject accidental, un-intended touches and deliver the experience that was intended. That is the formula for high accuracy: multi-mode sensing provides more data, and intelligently processing that data with UltraSense’s neural net algorithms can deliver the most robust touchpoint accuracy on the road to achieving 100% accuracy.

Fix-In-Post capability

In filmmaking, fixing in post-production is standard practice, and editing is a key capability for delivering amazing experiences. For touchpoint sensing, UltraSense has combined its focus on Experience Design with its AI expertise to develop an open architecture system that enhances the user experience.

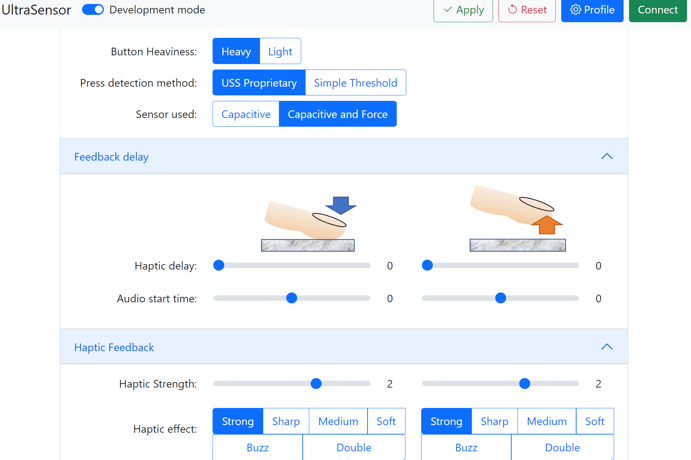

UltraStudio 2.0 Experience Design Tool

Making a solid-state touchpoint feel like a mechanical switch takes a lot of sensory control and precise timing of the lighting, audio, and particularly haptic feedback. UltraSense has released an open architecture tool to enable better user experience design, with the goal of better orchestrating HMI events and tuning the touch and feedback controls, including lighting and effects such as heartbeat pulsing and custom dimming waveforms. It supports uploading custom audible feedback, and of note is its fine control of touch-to-haptic events.

For haptic interfacing, the OEM can load custom haptic “waveforms”; these are the haptic user experience designers’ specifications for how the “feel” is engineered. For example, the knob click of a Nikon switch is very distinguishable and attributed to that brand; all Nikon cameras have that same precision and quality feel.

UltraStudio 2.0 is an open platform, and UltraSense showed how an accelerometer can be inserted into the system during prototyping to measure the resultant haptic waveforms. The accelerometer measures the actual response so that it can be compared with the desired one. The tool then lets you adjust the control waveform, increase or decrease the haptic strength, and introduce different patterns for press versus release.
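The measure-compare-adjust loop can be sketched as a simple proportional gain correction. The functions below are hypothetical and do not reflect how UltraStudio works internally; they only show the arithmetic of scaling a drive waveform so the measured peak moves toward the desired peak.

```python
def adjust_gain(gain, desired_peak, measured_peak, max_gain=1.0):
    """Scale the drive gain by desired/measured, capped at max_gain.

    Peaks are accelerometer magnitudes in the same (arbitrary) unit.
    If nothing was measured, the gain is left unchanged.
    """
    if measured_peak <= 0:
        return gain
    return min(max_gain, gain * desired_peak / measured_peak)


def scale_waveform(waveform, gain):
    """Apply the gain to every sample of a haptic drive waveform."""
    return [gain * sample for sample in waveform]


# The haptic response measured too strong: halve the drive strength.
new_gain = adjust_gain(gain=0.5, desired_peak=1.0, measured_peak=2.0)
print(new_gain)
print(scale_waveform([0.0, 1.0, -1.0], new_gain))
```

Separate press and release patterns could be handled the same way by keeping one waveform and one gain per event.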

Supply Chain Perspective

From a supply chain perspective, any assembly with a mix of suppliers is exposed to a higher risk of outages. UltraSense HMI controllers consolidate the most common elements into one part integrated at the touchpoint: a single AEC-Q100 certified solid-state component.

Advanced Force Technology – Simplified Designs with QuadForce

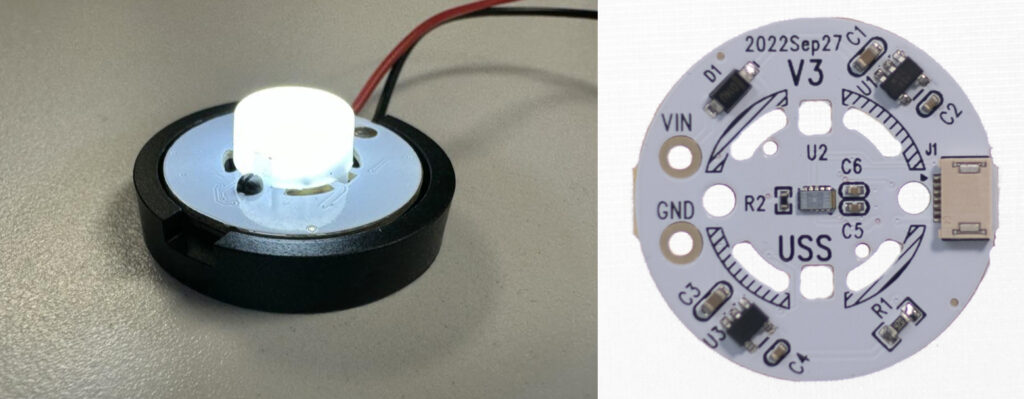

In-car overhead lighting can be a large, cumbersome structure, but UltraSense’s AC Piezoelectric QuadForce sensing technology can be used in innovative designs that result in a better and more reliable product, such as the standard overhead light.

The traditional light is an LED with a dome diffuser. On the printed circuit board (PCB) are the associated analog and digital electronic components that control the LED and present effects such as on/off, attenuation, and dimming. The traditional light also has a switch that controls the LED.

Adding the UltraSense TouchPoint HMI controller to this solution reduces the necessary components: the touchpoint can replace the lighting controller (AFE, analog front end) and manage all the lighting effects, and it can also ingeniously replace the switch. Here they demonstrated using the QuadForce technology built into every TouchPoint HMI controller to detect XY force deflection, measuring on the PCB the force from a press on the standard LED lens, all in the same space as the light lens. This eliminates the need for a separate switch and delivers a more intuitive interface: no need to press switches to find the right light, just press the light, as it is the switch.

Some automakers have learned the hard way that, traditionally, this is not as easy as it sounds. They have used capacitive ITO film that is vacuum sealed to the backside of the LED lens, but if this is not done precisely, bubbles can be present, and to make matters worse, the LED accentuates the bubbles so they are clearly visible when the light is on. The other issue with ITO films in the light case is that heat can cause the film to fade over time, which makes the lighting change and become less clear and true.

Application Solutions & Platform Demonstrations

Tremont Lighting Solution

The innovative “Tremont” mechanical design application with the UltraSense touchpoint redefines the solution to overhead lighting: it eliminates the separate switch, removes the need for capacitive film that can bubble and fade, and delivers a complete solid-state experience.

The benefits of an HMI controller based overhead light:

- Intuitive and easily discoverable, using the light as the touchpoint

- Parts and cost reduction: the HMI controller enables multi-function lighting, controls dimming effects, and eliminates the cost of a separate switch

- High-reliability solid-state components

- Compared to a capacitive ITO solution, no additional vacuum-molded ITO insertion, no air bubbles, and no film-fading side effects

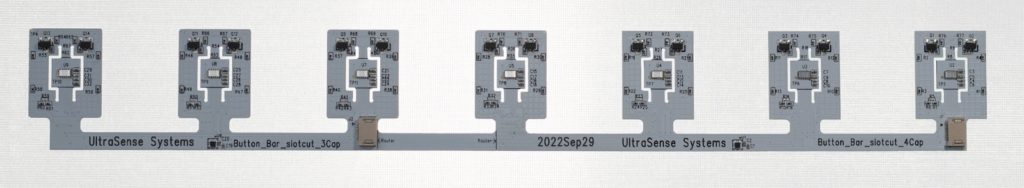

Bar Center Console Demonstration

The Bar center console demonstrator is an InPlane seven-touchpoint assembly with dual-color illumination and audible and haptic feedback. It uses a CapForce TouchPoint HMI controller, which combines UltraSense capacitive plus force sensing with ML (Machine Learning) algorithms to increase accuracy and remove common accidental activations such as wiping the surface clean. All the touchpoints are individually addressable and can be configured separately: the haptics for the +/- button pair can have a distinct waveform from the standard buttons, which in turn can differ from the hazard button.

It connects with the UltraStudio 2.0 human factors tuning software to customize haptic settings and store them in profiles.

Human Factors (HF) testing can be performed using UltraStudio to measure individual user preferences, and the detailed settings are all matched to the subject test results.

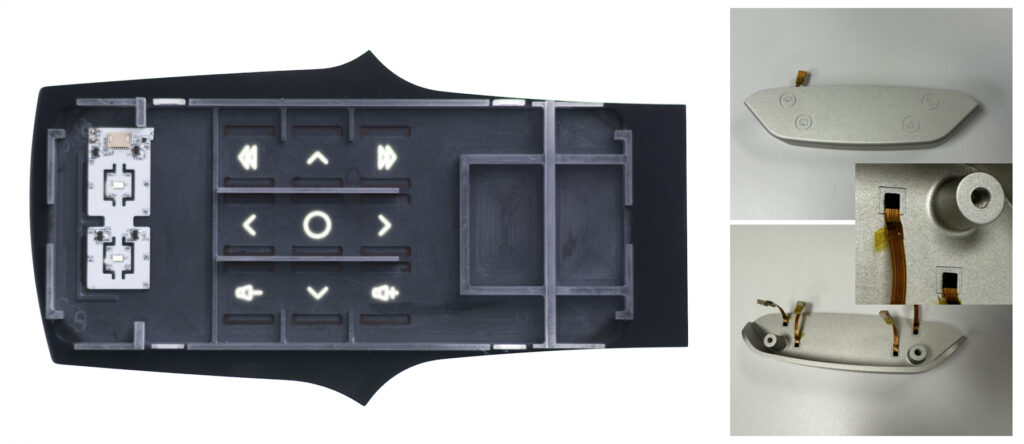

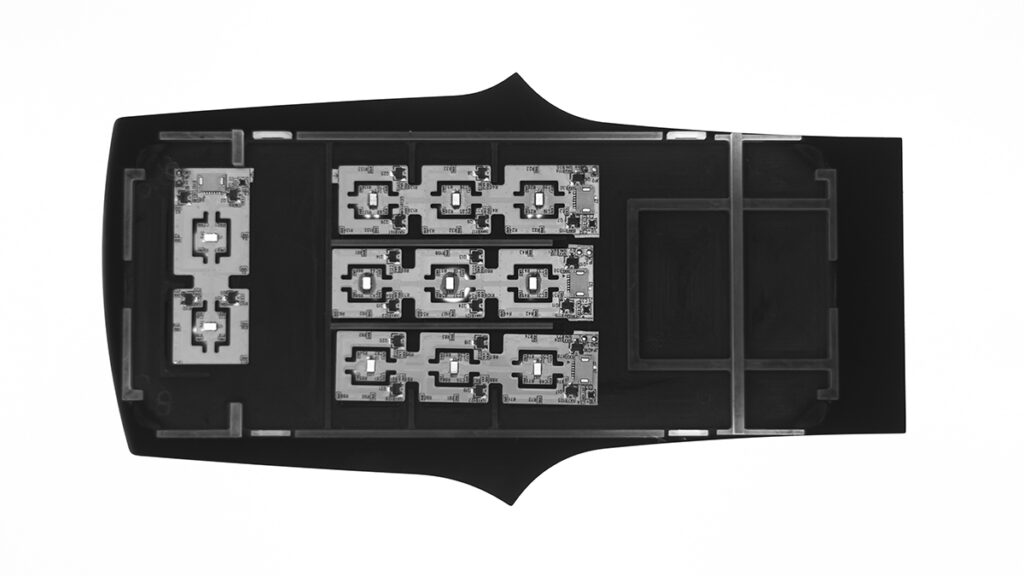

Baker Steering Wheel Demonstration

The Baker steering wheel demonstration platform puts all the technology together in an automotive steering wheel application. With the trend toward autonomous vehicles, stalks will need to be removed to enable stow-away steering wheels. InPlane sensing enables the wheel to be as thin as possible, and TouchPoint HMI controllers can operate under a variety of materials, from plastic to metal, wood, and soft materials.

Baker InPlane Sensing

CapForce Touchpoint nodes are laminated to the backside of the steering wheel module, with an UltraForce Touchpoint for the under-metal application.

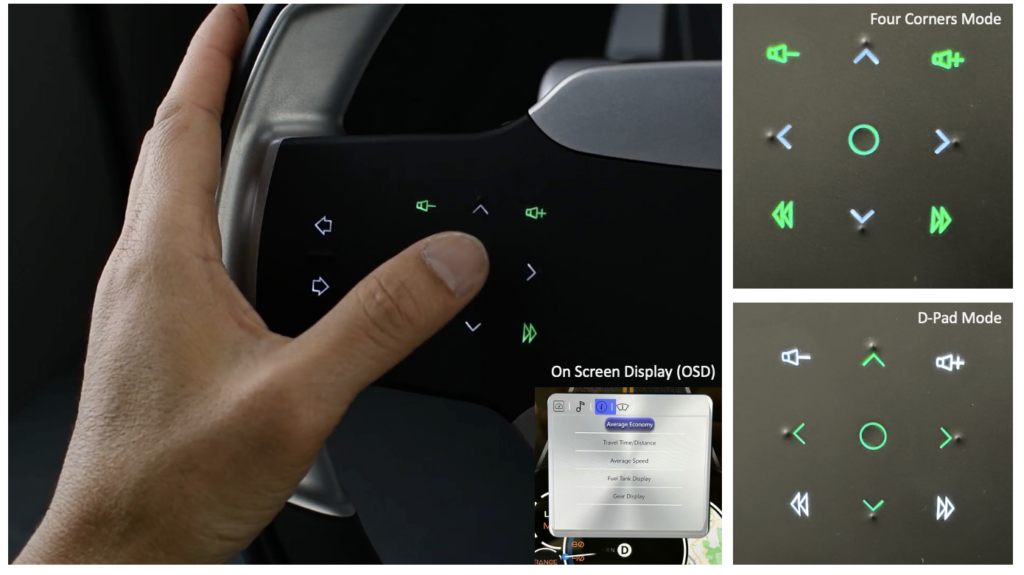

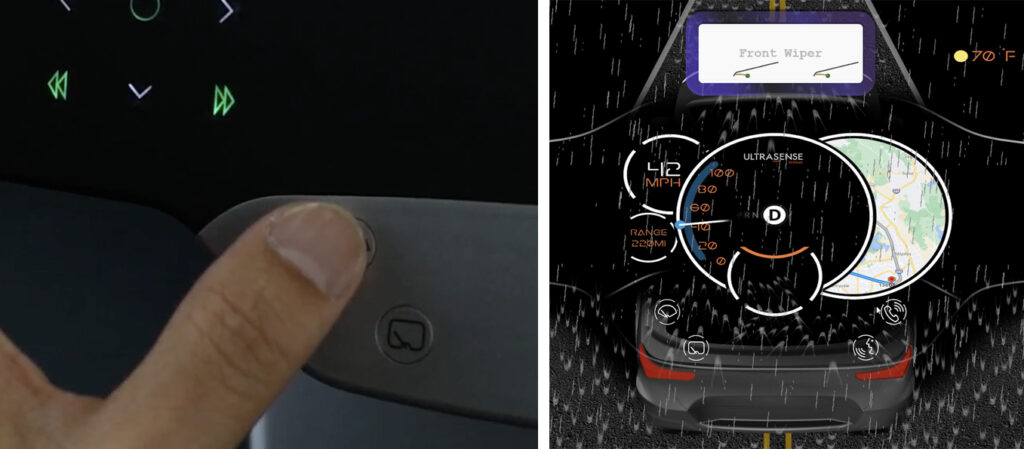

Stalk-Replacement | Solid-state Turn Signal

The left-hand button pair represents the left turn signal stalk. With good design and capable, accurate sensing, it can replace all the functions of a stalk, not just the 3-second lane change function found on some shipping cars today.

Physically, it uses a tactile “speed bump” marker so that the stalk “button pair” is easy to identify by feel. This blind operation lets the user focus on driving without needing to look down at the button. Behind the scenes, this is an example of CapForce at work, as a light touch to feel the “speed bump” does not trigger the buttons.

Next, a stalk provides two signal functions: lane change (blink 4 times) and signal left at a stop (blinking latches, then ceases after the turn). With good gestures, these are distinguished by a press versus a long press.
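A minimal sketch of the press versus long-press distinction follows. The 0.5 second threshold, the function name, and the mapping of a short press to lane change and a long press to the latched signal are assumptions for illustration; the demonstration did not specify them.

```python
# Assumed threshold separating a press from a long press, in seconds.
LONG_PRESS_S = 0.5
LANE_CHANGE_BLINKS = 4  # "blink 4 times" for a lane change


def turn_signal_action(press_duration_s):
    """Map a press duration to a turn signal behavior.

    Returns (mode, blinks). For the latched mode, blinks is None because
    the signal keeps blinking until the turn is completed.
    """
    if press_duration_s >= LONG_PRESS_S:
        return ("latched", None)
    return ("lane_change", LANE_CHANGE_BLINKS)


print(turn_signal_action(0.15))  # quick press
print(turn_signal_action(0.80))  # long press
```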

Multi-Mode Direction Pad (Dpad) | Affordance

In locations like the steering wheel, where several interfaces need to be consolidated into a finite space, a good usability practice is to introduce the Dual-mode direction pad (DM D-Pad). The benefit is higher “affordance”: the user can be less “precise” and still get the desired outcome.

Here the nine touchpoints are separated into two sets.

- Four Corners (default)

- D-Pad (used for operating with an On-Screen Display OSD)

Volume Control | Gestures

Volume is a frequently used function, and not all touch capability is created equal. Proper settings with good gesture design provide high affordance, so users can discover, without training, the advanced functions that can be a delighter.

To many, a slider may seem more capable than a volume button pair, but a good slider experience needs enough “segment length” to eliminate the rowing motion, and the physical control of the slide needs localized anchoring for precise control. Basically, compare using your entire arm as the pointer with anchoring your palm and sliding locally with your pointer finger: the local anchor delivers more precise control.

Good gestures and good UX can deliver better control than a basic slider that has rowing and anchoring challenges. A well-configured button pair introduces the concept of two-speed volume adjust: the traditional single step per press, plus a press-n-hold gesture that delivers the accelerated experience, which in this example jumps +4. Say the volume control ranges from 0 to 20, starts at 0, and you want volume 16. The basic control requires 16 presses to reach the goal, but with good gestures you can get to 16 in four gestures instead of 16 presses.
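The arithmetic behind the volume example can be checked with a short sketch. It assumes each press-n-hold counts as one gesture that jumps by a fixed amount and each normal press steps by one; the function name and parameters are hypothetical.

```python
def gestures_needed(target, start=0, jump=4):
    """Count the fewest gestures to move from start to target.

    Uses as many press-n-hold jumps as fit, then single-step presses
    for the remainder. With jump=1 this is the basic one-step control.
    """
    delta = abs(target - start)
    holds, presses = divmod(delta, jump)
    return holds + presses


print(gestures_needed(16, jump=1))  # basic control: 16 presses
print(gestures_needed(16, jump=4))  # two-speed control: 4 press-n-holds
print(gestures_needed(18, jump=4))  # 4 press-n-holds plus 2 presses
```

Targets that are not a multiple of the jump size still benefit, since the single-step press covers only the remainder.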

Under Metal TouchPoints

Four buttons use UltraForce TouchPoints under 2 mm of metal, representing front and rear wiper, voice control, and phone call.

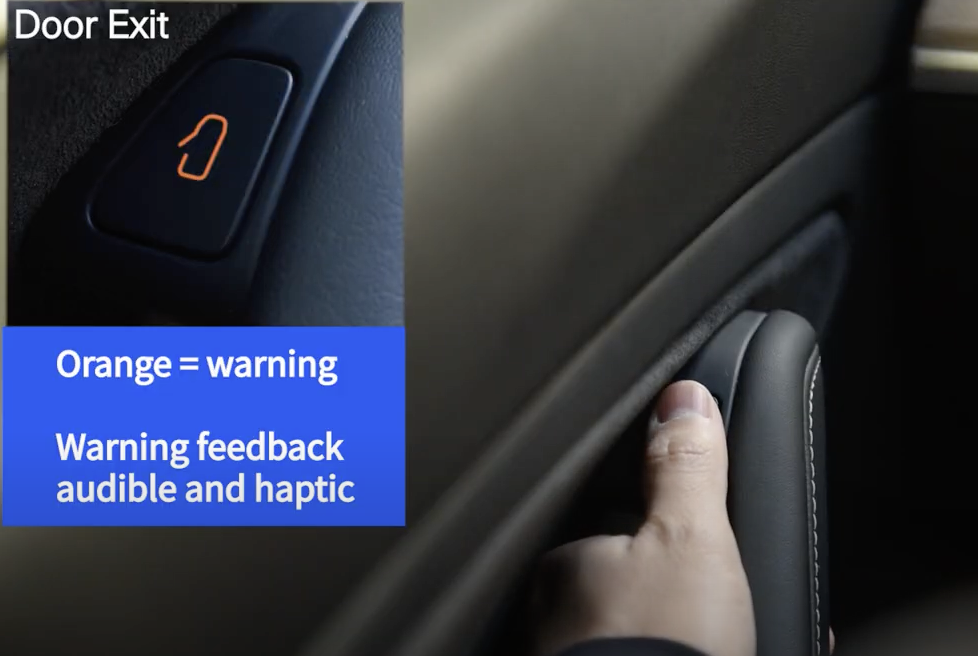

Bear Safe Exit & Optionality Demonstration

The Bear demonstration offers a “thought leadership” perspective on the advantages of a good HMI controller solution. It first shows that, with essentially one door exit module, this HMI controller can store two profiles: a basic exit and the premium “safe exit.”

With Safe Exit, the HMI controller communicates with the car’s existing Blind Spot Monitor (BSM) and uses that capability to identify whether a car or bicycle would be a hazard if the door were opened.

The TouchPoint HMI’s internal processing and no-latency haptics deliver a responsive experience that does not tax the main MCU. TouchPoint’s internal profile capability means the vehicle’s ECU only needs to send simple state-setting instructions.

Manufacturing simplicity is achieved as one part is installed and it is software configurable. SafeExit can be configured at the factory, at the dealership, and even through an OEM portal after the car purchase.
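The two-profile idea can be sketched as one function whose behavior depends on a software-selected profile. The profile names, the `door_exit_response` function, and the choice to return a warning when a hazard is present are assumptions for illustration; the report does not say what the module does when the BSM reports a hazard.

```python
from enum import Enum


class ExitProfile(Enum):
    """Profiles stored on the same door exit module (hypothetical names)."""
    BASIC = "basic"
    SAFE_EXIT = "safe_exit"


def door_exit_response(profile, bsm_hazard_detected):
    """Decide what a door-exit press does under the active profile.

    The basic profile ignores the Blind Spot Monitor. The safe exit
    profile warns instead of opening when a hazard is reported.
    """
    if profile is ExitProfile.SAFE_EXIT and bsm_hazard_detected:
        return "warn"
    return "open"


print(door_exit_response(ExitProfile.BASIC, bsm_hazard_detected=True))
print(door_exit_response(ExitProfile.SAFE_EXIT, bsm_hazard_detected=True))
```

Because only the selected profile changes, the same installed part can be reconfigured later without a hardware change.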

This experience is demonstrated in this video:

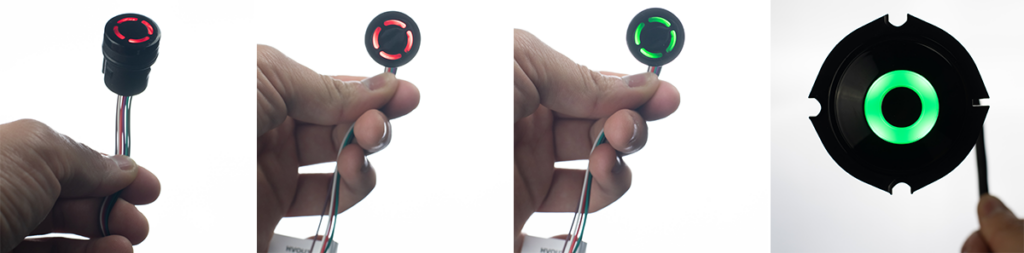

SSI Demonstrations

Plug-n-Play solid-state interfaces (SSI) are ready-to-roll implementations that come pre-calibrated: drill the hole, connect, and get going. They are built to survive the outdoor elements and use AEC-Q100 certified electronics. They are in production and have been used in industrial and solar applications as well as in automotive e-Lid solutions.

Libra Concept ML Demonstration

Libra is the codename for a concept demonstration showing the power of Artificial Intelligence / Machine Learning to implement a dual-mode trunk badge touch interface. A dual-mode interface can be used on a vehicle by having the top touchpoint open the tailgate’s glass and the bottom touchpoint open the entire tailgate together with the glass. The message is that, on the road to 100% accuracy, good ML can enhance the experience by accepting accurate touches and eliminating accidental ones. Beyond that, with the intelligence that a single QuadForce sensor can measure, a single touchpoint can now serve the role of two buttons.
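A simple rule-based stand-in shows how one four-channel force sensor could play the role of two buttons. The sensor layout (two upper and two lower readings), the thresholds, and the `badge_action` function are hypothetical; the Libra demonstration uses ML, which this sketch does not attempt to reproduce.

```python
def badge_action(top_left, top_right, bottom_left, bottom_right,
                 min_total=0.5, margin=0.2):
    """Pick an action from where the force is concentrated on the badge.

    Returns "open_glass" for a press on the upper half, "open_tailgate"
    for the lower half, and None for presses that are too weak or too
    close to the middle to call.
    """
    top = top_left + top_right
    bottom = bottom_left + bottom_right
    total = top + bottom
    if total < min_total:
        return None  # too light to count as a deliberate press
    balance = (top - bottom) / total  # +1 is all top, -1 is all bottom
    if balance > margin:
        return "open_glass"
    if balance < -margin:
        return "open_tailgate"
    return None  # ambiguous: reject rather than guess


print(badge_action(0.6, 0.6, 0.1, 0.1))
print(badge_action(0.1, 0.1, 0.6, 0.6))
print(badge_action(0.3, 0.3, 0.3, 0.3))
```

Rejecting ambiguous presses trades a few missed activations for fewer wrong ones, which matters when the two actions open different parts of the vehicle.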

Visit the UltraSense YouTube Channel to see more.

LG Velvet 2 Smart Phone Demonstration

Touch under metal solid-state with audible and haptic feedback

Three touchpoints, a volume +/- button pair and display on/off

The volume button pair features a press-n-hold gesture with two-speed volume adjust: a single step, or a fast adjustment that jumps by five.

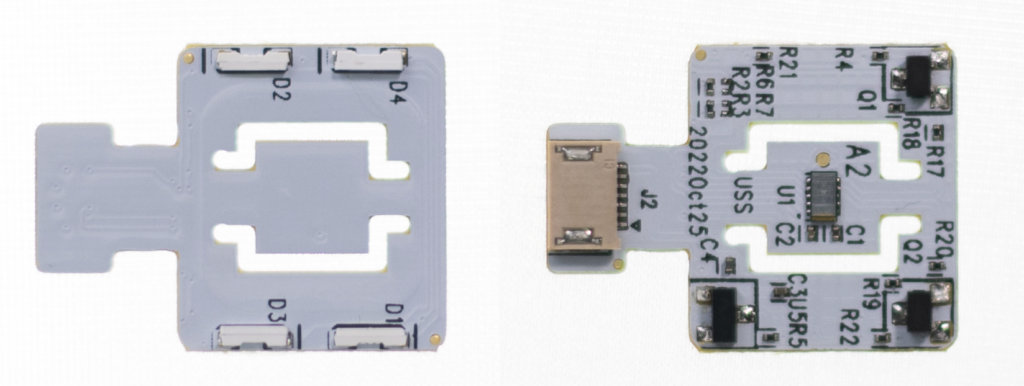

Norris TouchPoint Module (Part of the Development & Evaluation Kit)

A single touchpoint can be implemented on a single PCB, as illustrated by this component. These are images of the topside (laminated to the back of the A-surface) and the bottom side of the same module. The module topside can simply be laminated with VHB adhesive (the same as used for exterior automotive logo badges), then connect and go.

UltraSense Systems | TouchPoint Development Kit

To get started with the TouchPoint solutions, you can pick up a TouchPoint Development and Evaluation Kit. It includes a completely configured touch sample to baseline your setup and try all the features and settings, plus development components with single touchpoint modules to laminate to your A-surface and begin testing. Visit the website https://ultrasensesys.com/contact/ and get an account on the customer portal to learn more.